Ling-1T by Ant Group: Everything About This Trillion-Parameter AI Model

Introduction

Artificial intelligence is evolving quickly, and Ant Group has joined the trillion-parameter tier with its latest model, Ling-1T. The model is designed for complex reasoning, math problems, and code generation. Unlike closed models, Ling-1T is open-source, so developers and researchers worldwide can explore, modify, and use it in their own projects. It offers a glimpse of where scalable, efficient AI is heading.

What Is Ling-1T?

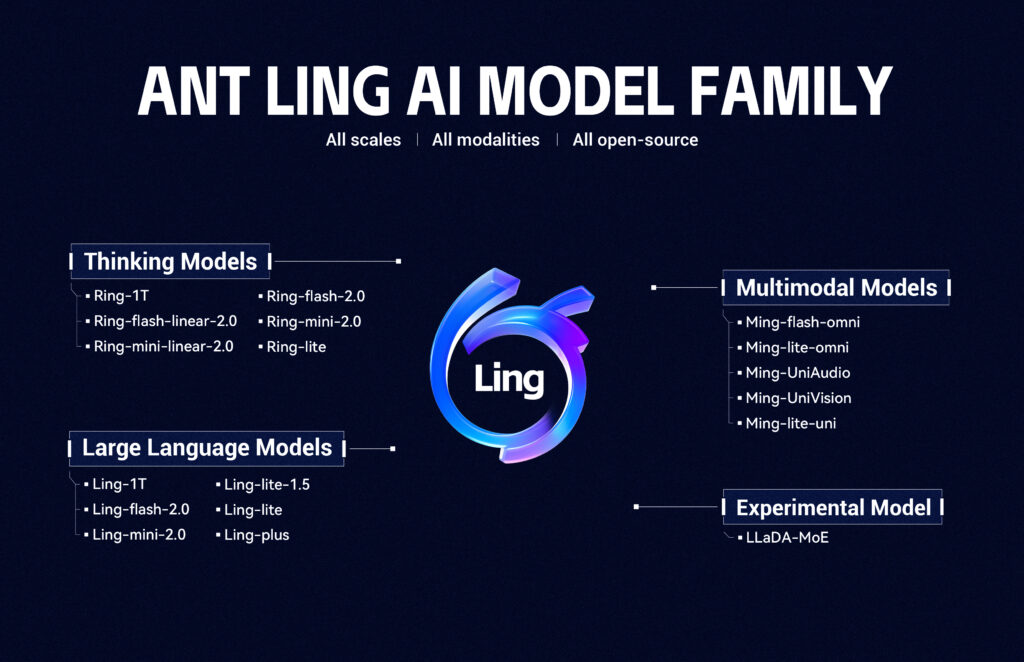

Ling-1T is a flagship model in Ant Group’s Ling AI family, which also includes:

- Ling Series: Non-thinking models built on Mixture-of-Experts (MoE) for general-purpose tasks

- Ring Series: Thinking models designed for reasoning-intensive processes

- Ming Series: Multimodal models that can understand text, images, audio, and video

- Experimental Models: LLaDA-MoE

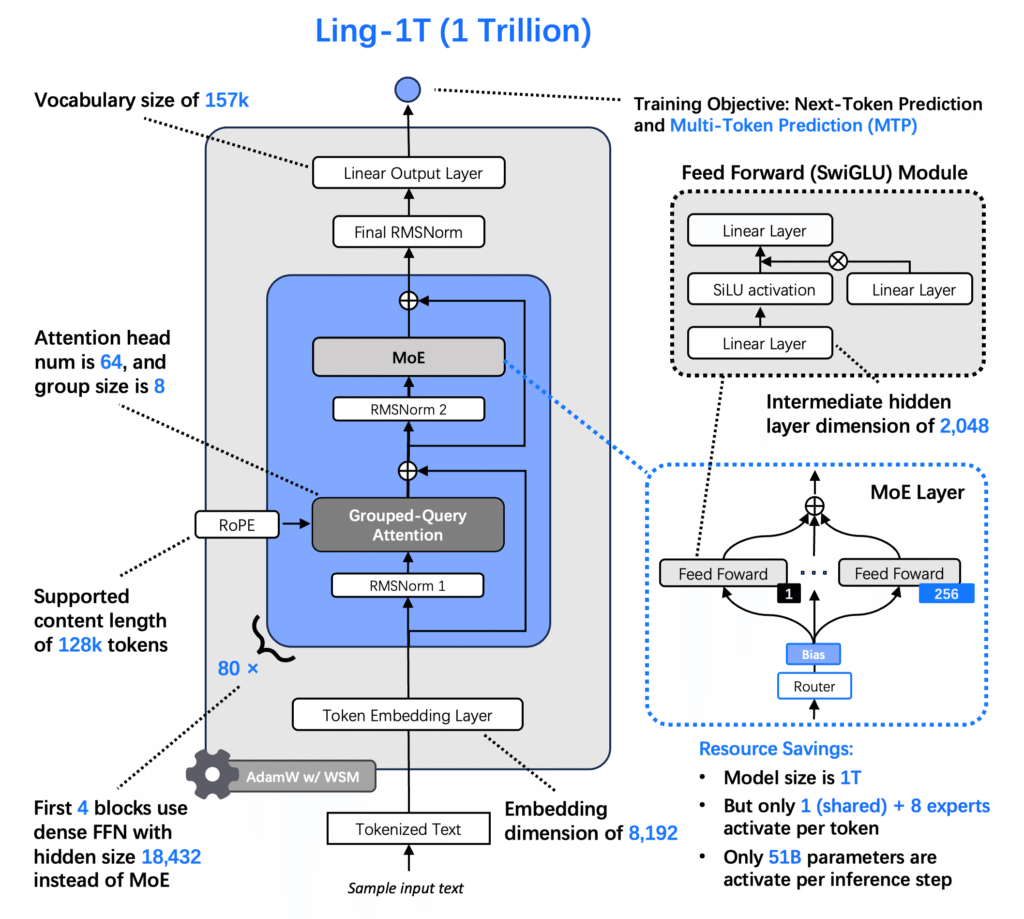

Ling-1T has 1 trillion parameters with around 50 billion active parameters per token. It’s trained on over 20 trillion reasoning-dense tokens, which makes it exceptionally good at handling long contexts and complex logical tasks. Its Evolutionary Chain-of-Thought (Evo-CoT) training method improves both accuracy and reasoning efficiency (businesswire.com).
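To make the "1 trillion total, around 50 billion active" figures concrete, here is a small back-of-the-envelope sketch. The parameter counts come from the paragraph above; the "about 2 FLOPs per active parameter per token" forward-pass rule is a common approximation that ignores attention cost, not a number published by Ant Group.

```python
TOTAL_PARAMS = 1_000_000_000_000   # 1T total parameters (from the article)
ACTIVE_PARAMS = 50_000_000_000     # ~50B active parameters per token (from the article)


def active_fraction(total_params: int, active_params: int) -> float:
    """Share of the model's parameters that take part in processing one token."""
    return active_params / total_params


def forward_flops_per_token(params_used: int) -> int:
    """Rule-of-thumb forward cost: ~2 FLOPs per parameter used (assumption)."""
    return 2 * params_used


fraction = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
moe_flops = forward_flops_per_token(ACTIVE_PARAMS)
dense_flops = forward_flops_per_token(TOTAL_PARAMS)

print(f"active fraction: {fraction:.1%}")
print(f"approx. forward FLOPs per token (MoE): {moe_flops:.1e}")
print(f"a dense 1T model would need about {dense_flops // moe_flops}x more")
```

Under these assumptions, each token touches roughly one twentieth of the weights, which is where the efficiency argument for Mixture-of-Experts comes from.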

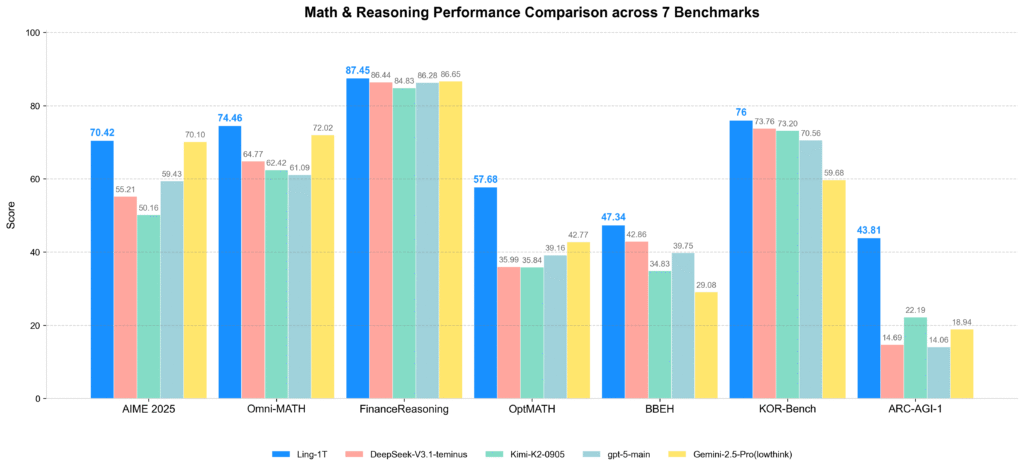

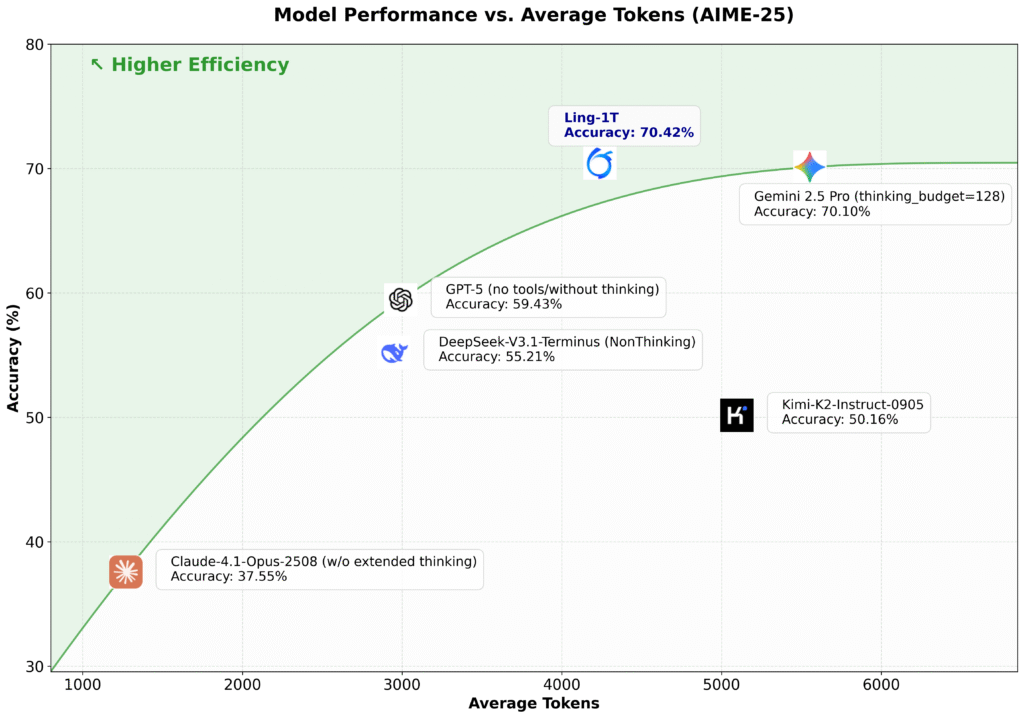

Ling-1T Performance Benchmarks

Ling-1T is not just large; it also performs well on demanding benchmarks. Notable results include:

- AIME 2025: 70.42% accuracy, showing strong performance in competition-level mathematics (businesswire.com)

- ArtifactsBench: Ranks first among open-source models for frontend code generation and visual reasoning (en.tmtpost.com)

These results suggest that Ling-1T can handle tasks requiring both deep reasoning and practical application, which makes it a credible open-source alternative to proprietary models like GPT-5 and Claude 4.5.

Comparison: Ling-1T vs GPT-5 and Claude 4.5

Ling-1T stands out for its open-source availability and its strong results on reasoning and code tasks, giving developers both flexibility and capability in one package.

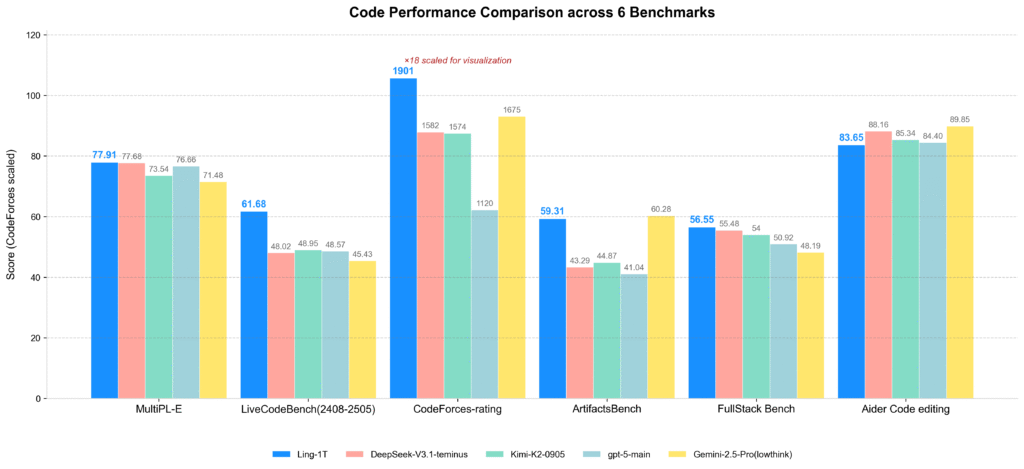

Code Generation Capabilities

Ling-1T’s Strengths:

- Excels in front-end code generation with aesthetic understanding

- Hybrid Syntax-Function-Aesthetics reward mechanism

- Ranks first among open-source models on ArtifactsBench

- Generates cross-platform compatible code with stylistic control

Comparison Insights: While GPT-5 and Claude 4.5 remain powerful closed-source alternatives, Ling-1T offers advantages for developers who prioritize transparency and customization. Because the weights are openly available, teams can fine-tune the model for their own needs and inspect its behavior directly, a level of control that proprietary APIs generally do not offer.

Reasoning and Tool Use

Ling-1T demonstrates emergent intelligence at trillion scale, achieving approximately 70% tool-call accuracy on the BFCL V3 benchmark with minimal instruction tuning. This capability emerged even though the model saw no large-scale trajectory data during training, which suggests strong transfer learning abilities.
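For readers unfamiliar with what "tool-call accuracy" measures, the sketch below scores model outputs by exact match on function name and arguments. It is a simplified illustration, not the official BFCL scorer, and the function names and sample calls (`get_weather`, `convert_currency`, `search_docs`) are hypothetical.

```python
import json


def parse_call(raw: str):
    """Parse a model's tool call from JSON text; return None if it is malformed."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return None
    return call if isinstance(call, dict) else None


def tool_call_accuracy(raw_predictions, references):
    """Fraction of predictions whose name and arguments both match the reference."""
    correct = 0
    for raw, expected in zip(raw_predictions, references):
        call = parse_call(raw)
        if (
            call is not None
            and call.get("name") == expected["name"]
            and call.get("arguments") == expected["arguments"]
        ):
            correct += 1
    return correct / len(references)


# Hypothetical reference calls and raw model outputs, for illustration only.
references = [
    {"name": "get_weather", "arguments": {"city": "Hangzhou"}},
    {"name": "convert_currency", "arguments": {"amount": 100, "to": "USD"}},
    {"name": "search_docs", "arguments": {"query": "MoE routing"}},
]
raw_predictions = [
    '{"name": "get_weather", "arguments": {"city": "Hangzhou"}}',               # correct
    '{"name": "convert_currency", "arguments": {"amount": 100, "to": "EUR"}}',  # wrong argument
    '{"name": "search_docs", "arguments": {"query": "MoE routing"',             # truncated JSON
]

accuracy = tool_call_accuracy(raw_predictions, references)
print(f"tool-call accuracy: {accuracy:.2%}")
```

Real benchmarks use more forgiving matching (for example, type-aware argument comparison), so exact-match scores like this one are a lower bound.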

Key Differentiators:

Pros of Ling-1T:

- Complete transparency and open-source accessibility

- Exceptional reasoning efficiency (accuracy per compute unit)

- Customizable for specific domains through fine-tuning

- No API costs or usage restrictions

- Strong emergent capabilities in visual reasoning

Cons of Ling-1T:

- Requires significant computational resources for deployment

- GQA (grouped-query attention) is stable but relatively costly

- Limited agentic ability compared to specialized models

- Occasional instruction following or role confusion issues

GPT-5 and Claude 4.5 Advantages:

- Easier deployment through API access

- Highly refined instruction following

- Strong multi-turn conversation capabilities

- Robust safety guardrails

Pre-Training at Trillion Scale

The Ling 2.0 architecture was designed from the ground up for trillion-scale efficiency, guided by the Ling Scaling Law (arXiv:2507.17702). This ensures architectural and hyperparameter scalability even under 1e25–1e26 FLOPs of compute.

Key architectural innovations include:

- 1T total / 50B active parameters with a 1/32 MoE activation ratio

- Multi-token prediction (MTP) layers for enhanced compositional reasoning

- Aux-loss-free, sigmoid-scoring expert routing with zero-mean updates

- QK Normalization for fully stable convergence
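The routing bullet above can be illustrated with a toy sketch. This is one plausible reading of "aux-loss-free, sigmoid-scoring expert routing with zero-mean updates", not Ant Group's implementation: experts are scored with a sigmoid, a per-expert bias influences selection only, and the bias is adjusted from observed load instead of through an auxiliary loss. The function names, the step size, and the re-centering step are all assumptions.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route_token(logits, bias, top_k):
    """Select top_k experts for one token.

    Selection ranks experts by sigmoid score plus bias; the gate weights
    are computed from the unbiased scores of the selected experts.
    """
    scores = [sigmoid(z) for z in logits]
    ranked = sorted(range(len(scores)), key=lambda e: scores[e] + bias[e], reverse=True)
    chosen = ranked[:top_k]
    total = sum(scores[e] for e in chosen)
    return {e: scores[e] / total for e in chosen}


def update_bias(bias, load, step=0.01):
    """Raise the bias of under-loaded experts and lower it for over-loaded ones.

    The raw update is re-centered so that it sums to zero across experts
    (our interpretation of "zero-mean updates").
    """
    mean_load = sum(load) / len(load)
    raw = [step * ((mean_load > l) - (mean_load < l)) for l in load]
    mean_raw = sum(raw) / len(raw)
    return [b + r - mean_raw for b, r in zip(bias, raw)]


logits = [2.0, 1.0, 0.0, -1.0]          # hypothetical router outputs for one token
gates = route_token(logits, [0.0] * 4, top_k=2)
new_bias = update_bias([0.0] * 4, load=[10, 0, 0, 0])
print(gates)
print(new_bias)
```

Because balance is enforced through the selection bias, no extra loss term competes with the language-modeling objective, which is the usual motivation for aux-loss-free routing.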

Ling-1T is the largest FP8-trained foundation model known to date. FP8 mixed-precision training yields a 15%+ end-to-end speedup and improved memory efficiency while keeping loss deviation from BF16 at ≤ 0.1% across 1T tokens. A fine-grained, heterogeneous 1F1B interleaved pipeline further boosts utilization by 40%+. System-level optimizations (fused kernels, communication scheduling, recomputation, checkpointing, simulation, and telemetry) ensure stable trillion-scale training.

Applications of Ling-1T

Ling-1T’s abilities are versatile. Some practical use-cases are:

- Software Development: Helps in writing, debugging, and optimizing code

- Education: Can tutor students in math, logic, and problem-solving

- Healthcare: Assists researchers with data analysis and medical research

- Finance: Improves modeling and financial predictions

Its adaptability makes it a solid tool for industries looking for AI that can reason efficiently and provide real results.

Open-Source Accessibility

One of Ling-1T’s biggest advantages is that it’s open-source. It can be downloaded from platforms like Hugging Face and ModelScope, allowing global AI enthusiasts to explore, fine-tune, and integrate the model in their own projects. This move makes Ling-1T not just a product but a collaborative platform for AI innovation (businesswire.com).

Deployment Options

The model supports multiple deployment frameworks:

- vLLM for high-throughput serving

- SGLang with FP8 and BF16 support

- Transformers for direct integration

- ZenMux API for quick experimentation
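As a rough illustration of what calling a served model looks like, the sketch below builds a chat request for an OpenAI-compatible endpoint, which is the interface vLLM exposes when run as a server. The base URL, the model identifier `inclusionAI/Ling-1T`, and the `max_tokens` value are assumptions for this example; check the model card for the exact names your deployment uses.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,  # illustrative limit, not a recommended setting
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first completion's text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Assumed local server address and model id; adjust to your deployment.
request = build_chat_request(
    "http://localhost:8000", "inclusionAI/Ling-1T", "Write a CSS rule that centers a div."
)
print(request.full_url)
# reply = send(request)  # uncomment once a server is actually running
```

Keeping request construction separate from sending makes it easy to point the same code at a hosted API instead of a local server.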

Technical Requirements

Deploying Ling-1T requires substantial computational resources. The recommended setup includes:

- Multi-GPU configurations with tensor parallelism

- High-memory systems for handling context windows

- Optimized inference frameworks for efficient serving
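To give a sense of scale for these requirements, the sketch below estimates the memory needed just to hold the weights. The 1T parameter count and the FP8 and BF16 formats come from this article; the 80 GB per-GPU figure is an assumed example, and the estimate deliberately ignores KV cache, activations, and framework overhead, so real deployments need more.

```python
import math

TOTAL_PARAMS = 1_000_000_000_000          # 1T parameters (from the article)
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}   # storage size of each format


def weight_memory_gb(total_params: int, precision: str) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return total_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(total_params: int, precision: str, gpu_memory_gb: float) -> int:
    """Lower bound on GPU count: weights only, no KV cache or activations."""
    return math.ceil(weight_memory_gb(total_params, precision) / gpu_memory_gb)


GPU_MEMORY_GB = 80  # assumed accelerator size, for illustration only

for precision in ("fp8", "bf16"):
    size = weight_memory_gb(TOTAL_PARAMS, precision)
    gpus = min_gpus_for_weights(TOTAL_PARAMS, precision, GPU_MEMORY_GB)
    print(f"{precision}: ~{size:,.0f} GB of weights, at least {gpus} GPUs")
```

Even this optimistic bound shows why multi-GPU, and usually multi-node, tensor parallelism is needed to serve the model.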


Future Prospects of Ling-1T

Ant Group is not stopping here. Future plans for Ling-1T include:

- Improving Agentic Capabilities: Better multi-turn conversation, memory, and tool use

- Enhanced Instruction Alignment: Reducing role confusion and making outputs more consistent

- Efficiency Optimizations: Introducing hybrid attention mechanisms to save computational cost

- Architecture Improvements:

  - Hybrid attention mechanisms for improved efficiency

  - Reduced computational costs for long-context reasoning

  - Enhanced memory management for extended interactions

- Capability Expansion:

  - Strengthened agentic abilities for multi-turn interaction

  - Improved long-term memory systems

  - Advanced tool-use integration

  - More robust instruction following and identity consistency

These updates aim to make Ling-1T closer to general intelligence while remaining accessible for everyone in the AI community.

Pros & Cons

Pros:

- Trillion-parameter scale ensures high reasoning and generation ability

- Open-source for research and development

- Strong performance in coding, math, and logical reasoning

- Supports context lengths of up to 128K tokens

Cons:

- Requires high-end computational resources

- Not fully optimized for real-time decision-making

- Occasional instruction-following inconsistencies

FAQs

1. How does Ling-1T compare to GPT-5 in code generation?

On ArtifactsBench, Ling-1T ranks first among open-source models for front-end code generation. How it compares with GPT-5 depends on the task, but its open weights make it easier for developers to inspect, use, and customize.

2. Can Ling-1T be fine-tuned for specific tasks?

Yes, it supports parameter-efficient fine-tuning methods like LoRA, making customization possible for specific applications.

3. Is Ling-1T suitable for real-time applications?

Ling-1T is better suited for batch processing and reasoning tasks rather than instant decision-making.

4. Where can I download Ling-1T?

You can get it from Hugging Face or ModelScope, depending on your region.

5. What industries benefit the most from Ling-1T?

Education, finance, healthcare, and software development are some of the key sectors that can leverage Ling-1T effectively.

6. What makes Ling-1T different from other trillion-parameter models?

Ling-1T distinguishes itself through its Mixture-of-Experts architecture, which activates only about 50 billion parameters per token and makes it significantly more efficient than a dense trillion-parameter model. The Evolutionary Chain-of-Thought (Evo-CoT) training method and open-source accessibility further set it apart from closed-source alternatives like GPT-5.

Conclusion

Ling-1T by Ant Group combines scale, reasoning power, and open-source accessibility. Whether you are a developer, researcher, or industry professional, Ling-1T offers capabilities that were previously available mostly in proprietary models. With further enhancements planned, the model is positioned to play a significant role in open AI development.
